In a recent series of blog posts, I showed how to go from a basic StackStorm installation and pack deployed using Ansible to a one-touch cloud deployment of that entire custom setup using BitOps, modifying only a handful of files in the process.

We only barely scratched the surface of our implementation, and we can always go further. One aspect I tried to be mindful of throughout the guides was the various ways of managing secrets. In this guide we will detail how we can use an AWS Key Management Service (KMS) key together with AWS Systems Manager (SSM) Parameter Store to take that a step further.

In practical terms: we'll create a BitOps before hook script we use to retrieve all of the secret variables we need from AWS, and demonstrate how we can load those into the BitOps container environment for usage within our existing Ansible StackStorm playbook.

If you want to skip ahead, you can view the final code on GitHub.

This is an addendum in a StackStorm tutorial series:

- Part 1: DevOps Automation using StackStorm - Getting Started

- Part 2: DevOps Automation using StackStorm - Deploying With Ansible

- Part 3: DevOps Automation using StackStorm - Cloud Deployment via BitOps

- Part 4: DevOps Automation using StackStorm - BitOps Secrets Management

This article assumes that you have completed or read over the previous articles and have some familiarity with them, as this post serves to expand on the concepts and code previously developed. If you want to jump-start yourself and dive in here, just grab the files from the GitHub repo for the previous article.

To finish this tutorial you will need:

- npm

- docker

- A GitHub account with a personal access token

- An AWS account with an AWS access key and AWS secret access key

If your AWS account is older than 12 months and you are outside of AWS’ free tier, this tutorial will cost $0.0464 hourly as we will deploy StackStorm to a t2.medium EC2 instance. There is an additional fee for the use of Systems Manager, however there is no fee for using Parameter Store as our requests will be low and we are not using advanced parameters.

Can You Keep a Secret?

A typical process with secrets is to manage them separately from the deployment process by using a tool to save a secret value into a secrets store from a local machine. This keeps the actual secrets far from the checked in config, and then it's just a matter of passing around secret names.

If you haven't joined us before, we'll need to quickly set up a BitOps operations repo, which we can scaffold with the BitOps Yeoman generator:

npm install -g yo

npm install -g @bitovi/generator-bitops

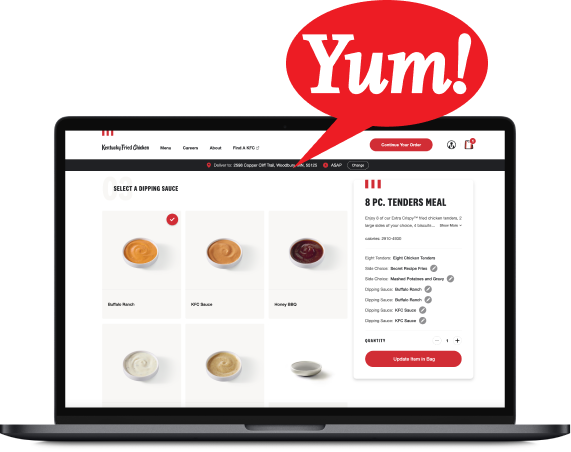

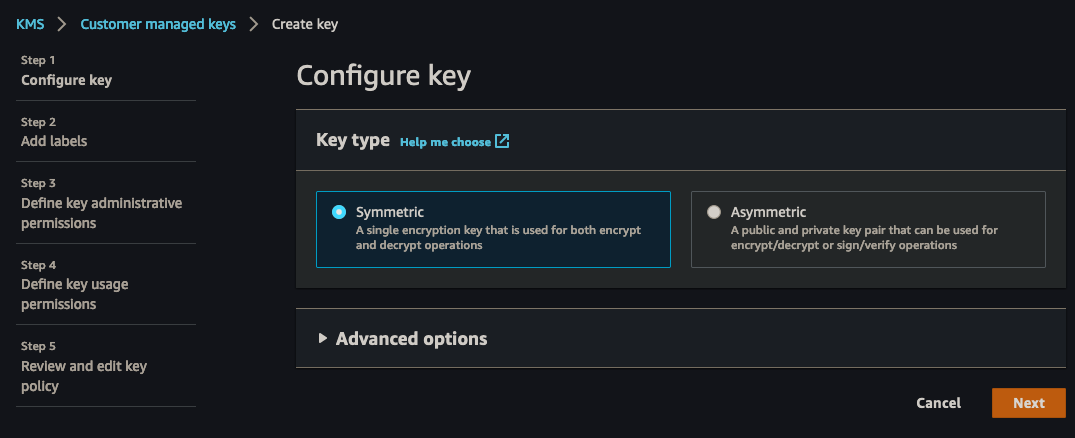

yo @bitovi/bitops

Next we'll need to create a KMS key, which is simple enough to do via click-ops, as the only things we care about are the name and the resulting ID of the key after creation. You'll want a symmetric (default setting) key:

Make sure you grant your AWS user access to the key!

You can also generate the key from the shell if you have the AWS CLI installed and configured:

    aws kms create-key --description "ST2 - BitOps KMS key"

    {
        "KeyMetadata": {
            "AWSAccountId": "MYAWSACCOUNTID",
            "KeyId": "b5b59621-1900-5bas-71bn-abochj4asd14",
            "Arn": "arn:aws:kms:us-west-1:xxxxxxxxxxxx:key/b5b59621-1900-5bas-71bn-abochj4asd14",
            "CreationDate": 167681234.239,
            "Enabled": true,
            "Description": "ST2 - BitOps KMS key",
            "KeyUsage": "ENCRYPT_DECRYPT",
            "KeyState": "Enabled",
            "Origin": "AWS_KMS",
            "KeyManager": "CUSTOMER"
        }
    }

The only part we're really interested in is the KeyId, as we'll need it to tell SSM which key should encrypt the parameters we write to Parameter Store.
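If you script the key creation rather than reading the output by eye, the KeyId can be pulled out of that JSON. Here is a minimal Python sketch, assuming the output shape shown above; the sample is abbreviated, and extract_key_id is a name made up for illustration:

```python
import json

# Abbreviated sample of the JSON printed by `aws kms create-key`.
create_key_output = """
{
    "KeyMetadata": {
        "KeyId": "b5b59621-1900-5bas-71bn-abochj4asd14",
        "Description": "ST2 - BitOps KMS key",
        "KeyState": "Enabled"
    }
}
"""


def extract_key_id(raw_json):
    """Return the KeyId from the JSON emitted by `aws kms create-key`."""
    return json.loads(raw_json)["KeyMetadata"]["KeyId"]


key_id = extract_key_id(create_key_output)
print(key_id)
```

The AWS CLI can also do this filtering itself through its global --query and --output options, for example --query KeyMetadata.KeyId --output text.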

Now, all we have to do is add our secret to Parameter Store. In this case it's a GitHub personal access token:

    aws ssm put-parameter \
        --name "/st2-bitops-test/secret/github_token" \
        --value "wL/SK5g37dz6GqL07YEXKObR6" \
        --type SecureString \
        --key-id b5b59621-1900-5bas-71bn-abochj4asd14 \
        --description "GitHub key for custom st2 pack repos"

A few notes:

- We set name to /st2-bitops-test/secret/github_token. While this could be any name, it's a good idea to start thinking about our structure early: we may have values in Parameter Store that don't belong to this repo, or others that are not necessarily secrets.

- We define --type SecureString to encrypt our token in Parameter Store; otherwise it would simply be stored as plaintext.

- Most importantly, we assign our --key-id b5b59621-1900-5bas-71bn-abochj4asd14, which is what ties the parameter to our KMS key. It's important that our KMS key, Parameter Store, and EC2 instance all exist within the same region in AWS, and that your AWS account has been granted access to the KMS key.
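The same call can be made from Python instead of the CLI. The sketch below only builds the arguments, so it runs without AWS credentials. The helper names parameter_path and build_put_parameter_args are hypothetical, and the value is a placeholder rather than a real token:

```python
def parameter_path(environment, secret_name):
    """Build a Parameter Store name such as /st2-bitops-test/secret/github_token."""
    return "/{}/secret/{}".format(environment, secret_name)


def build_put_parameter_args(environment, secret_name, value, key_id, description=None):
    """Assemble keyword arguments for the SSM put_parameter call."""
    args = {
        "Name": parameter_path(environment, secret_name),
        "Value": value,
        "Type": "SecureString",  # encrypt the value rather than store plaintext
        "KeyId": key_id,         # the KMS key used for the encryption
    }
    if description:
        args["Description"] = description
    return args


args = build_put_parameter_args(
    "st2-bitops-test",
    "github_token",
    "example-token-value",  # placeholder, not a real secret
    "b5b59621-1900-5bas-71bn-abochj4asd14",
    description="GitHub key for custom st2 pack repos",
)
print(args["Name"])

# With boto3 installed and credentials configured, the dict could be passed on:
#   boto3.client("ssm").put_parameter(**args)
```

Keeping the path construction in one helper means the naming scheme (environment, then secret, then the variable name) is defined in a single place for both writing and reading.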

Pop and Lock

We have our value in AWS' Parameter Store, but how do we call that into our BitOps container for use in our Ansible playbook?

One option could be to utilize the available Ansible community module for manipulating AWS KMS stores and call our variables directly in the playbook. However, this idea is limited in application as it means our values are only available from AWS. By using BitOps, we can draw secrets from several different places or perform pre-run scripts to gather the latest output from an API before running our playbook.

A more flexible way to approach this is to make further use of the BitOps lifecycle scripts. Using lifecycle before & after run scripts, we can create scripts that export the values to the BitOps container at large before executing our Ansible playbook by utilizing extra_env, a config file BitOps looks for within its Ansible tool directory at /operations_repo/ansible/extra_env.

Using lifecycle scripts allows us to use whatever language we want to interface with whatever parameter store we may use. We are using Parameter Store for this blog, but these principles apply equally to Microsoft Azure Key Vault, Google Cloud Secret Manager, or even a local bespoke API.

Here, we'll use a basic Python script for collecting a secret for our operations repo from AWS and outputting it to extra_env:

    st2-bitops-test:
    └── _scripts
        └── ansible
            └── get-aws-ssm-var.py (secret)

    import os
    import sys

    import boto3
    import botocore.exceptions

    # Provided by the BitOps container environment
    ENVROOT = os.getenv('ENVROOT')
    ENVIRONMENT = os.getenv('ENVIRONMENT')

    ssm = boto3.client("ssm")
    secret_name = ""
    output_file = "extra_env"


    def get_ssm_secret(parameter_name):
        # Fetch the parameter and have SSM decrypt it for us
        return ssm.get_parameter(
            Name=parameter_name,
            WithDecryption=True
        )


    if __name__ == "__main__":
        try:
            secret_name = sys.argv[1]
        except IndexError as exception:
            print("Error - InvalidSyntax: Parameter Store variable to look up not specified.")
        else:
            if secret_name is not None:
                aws_secret_path = "/{}/secret/{}".format(ENVIRONMENT, secret_name)
                try:
                    secret = get_ssm_secret(aws_secret_path)
                    secret_value = secret.get("Parameter").get("Value")
                    # Append SECRET_NAME=secretvalue as a new line in extra_env
                    with open(ENVROOT + "/ansible/" + output_file, "a+") as f:
                        f.write(str.upper(secret_name) + "=" + secret_value + "\n")
                    print(secret_name)
                except botocore.exceptions.ClientError:
                    print("Error - ParameterNotFound: Invalid value, or parameter not found in Parameter Store for this region. Check value name and delegated access.")

This script is called from /ansible/bitops.before-deploy.d/my-before-script.sh with a single variable name that has previously been stored in Parameter Store. It appends the secret, in the format SECRET_NAME=secretvalue, to a new line in extra_env, doing some basic error handling in the process.

    st2-bitops-test:
    └── ansible
        └── bitops.before-deploy.d
            └── my-before-script.sh

    #!/bin/bash
    echo "I am a before ansible lifecycle script!"

    # Dependency install
    pip install setuptools boto boto3 botocore virtualenv

    # Get our vars from SSM
    python $TEMPDIR/_scripts/ansible/get-aws-ssm-var.py github_token
    python $TEMPDIR/_scripts/ansible/get-aws-ssm-var.py secret_password

With extra_env in place, what will happen at container execution time is:

- The /ansible/bitops.before-deploy.d/ scripts will run, writing to the extra_env file.

- extra_env will be looked for by BitOps and, when found, sourced into the container's environment.

- Whereafter it can be called within our Ansible playbook using {{ lookup('env','ST2_GITHUB_TOKEN') }}.
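To make the file format concrete, here is a small Python sketch that reads KEY=value lines the way a consumer of extra_env might. BitOps sources the file itself, so this is only an illustration of what our script produces; parse_extra_env and the sample values are made up for the example:

```python
def parse_extra_env(text):
    """Parse KEY=value lines into a dict, skipping blank lines and comments."""
    env = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        # Split on the first "=" only, so values may contain "=" themselves.
        key, sep, value = line.partition("=")
        if sep:
            env[key.strip()] = value
    return env


sample = "GITHUB_TOKEN=example-token\nSECRET_PASSWORD=pa=ss\n"
parsed = parse_extra_env(sample)
print(sorted(parsed))
```

Note that the key on each line is the uppercased name passed to the fetch script, and the name given to the env lookup in the playbook has to match that key exactly.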

For multiple variables we can simply call our basic script multiple times. As our application expands we may want to update our variable fetching script to take in a list of arguments, however the key to remember is to start small!
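When that time comes, one possible shape for a list-based version is sketched below. It assumes the same /ENVIRONMENT/secret/name path layout as our script and uses the SSM get_parameters call, which takes a list of names. FakeSSM is a hypothetical stand-in for boto3.client("ssm") so the sketch runs without AWS access, and fetch_secrets and to_env_lines are illustrative names:

```python
def fetch_secrets(ssm, environment, secret_names):
    """Fetch several parameters in one call and return {ENV_NAME: value}."""
    # Map each full Parameter Store path back to the short name we were given.
    paths = {"/{}/secret/{}".format(environment, name): name for name in secret_names}
    # get_parameters accepts at most 10 names per call.
    response = ssm.get_parameters(Names=list(paths), WithDecryption=True)
    missing = response.get("InvalidParameters", [])
    if missing:
        raise KeyError("Not found in Parameter Store: " + ", ".join(missing))
    return {paths[p["Name"]].upper(): p["Value"] for p in response["Parameters"]}


def to_env_lines(secrets):
    """Render secrets as NAME=value lines, ready to append to extra_env."""
    return "".join("{}={}\n".format(k, v) for k, v in sorted(secrets.items()))


class FakeSSM:
    """Hypothetical offline stand-in for boto3.client("ssm")."""

    def __init__(self, store):
        self.store = store

    def get_parameters(self, Names, WithDecryption):
        found = [{"Name": n, "Value": self.store[n]} for n in Names if n in self.store]
        invalid = [n for n in Names if n not in self.store]
        return {"Parameters": found, "InvalidParameters": invalid}


fake = FakeSSM({
    "/st2-bitops-test/secret/github_token": "example-token",
    "/st2-bitops-test/secret/secret_password": "example-password",
})
secrets = fetch_secrets(fake, "st2-bitops-test", ["github_token", "secret_password"])
lines = to_env_lines(secrets)
print(sorted(secrets))
```

Passing the client in as an argument keeps the lookup logic testable; in the real before script the first argument would be boto3.client("ssm").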

What we should see when BitOps executes our playbook is our previously developed custom ST2 packs being installed with our GitHub token pulled down from Parameter Store:

TASK [StackStorm.st2 : Install st2 packs] ************************************

changed: [localhost] => (item=st2)

changed: [localhost] => (item=https://dylan-bitovi:wL/SK5g37dz6GqL07YEXKObR6@github.com/bitovidylan-/my_st2_pack.git)

changed: [localhost] => (item=https://dylan-bitovi:wL/SK5g37dz6GqL07YEXKObR6@github.com/dylan-bitovi/my_st2_jira.git)

If you've made it this far, there may be some questions about the approach demonstrated up until this point in relation to security. Under normal circumstances, writing files that contain protected values, or exporting those values to the environment at large should be avoided. However, since this is all running within our BitOps container which will be destroyed upon completion, we have a bit more leniency in this regard. The crucial element is that we are not committing the secrets to our repo itself.
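If you would still like to tighten things up inside the container, one option is to create the secrets file so that only its owner can read it. This is a sketch of that idea on a POSIX system, not something the script above does; append_secret is a hypothetical helper and the path is a temporary directory used for demonstration:

```python
import os
import stat
import tempfile


def append_secret(path, name, value):
    """Append NAME=value to path, creating the file as owner read/write only."""
    # The 0o600 mode is applied only when the file is first created.
    fd = os.open(path, os.O_WRONLY | os.O_APPEND | os.O_CREAT, 0o600)
    with os.fdopen(fd, "a") as f:
        f.write("{}={}\n".format(name.upper(), value))


with tempfile.TemporaryDirectory() as tmp:
    env_file = os.path.join(tmp, "extra_env")
    append_secret(env_file, "github_token", "example-token")
    append_secret(env_file, "secret_password", "example-password")
    mode = stat.S_IMODE(os.stat(env_file).st_mode)
    with open(env_file) as f:
        contents = f.read()

print(oct(mode))
```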

Time Capsules

What we've done with these scripts is develop a flexible way that allows us to utilize a secure persistent store for the keys to our application. We can utilize this same script and approach for any new before scripts we develop that tie into our BitOps operations repo.

The previous guide passed in BitOps environment variables directly. That approach does not scale well for very long, and it makes our Docker container execution command more cluttered.

By moving these values up to Parameter Store, we now have a secure and centrally managed platform for all of our instances to refer to, one that can scale along with them. Should we wish to move to a blue-green style of deployment, we have centralized one of our required data structures to make those advancements easier. What are only small savings or efficiencies now will pay huge dividends later.

In other words, we now have a single source of truth that can be updated and referred to by all deployments of our application!

If you have further questions or suggestions, please reach out by joining the StackStorm Community Slack, or drop us a message at Bitovi!

