AI can be transformative, but it can also fail spectacularly if user experience and real-world needs aren’t top priorities. Sometimes, the biggest danger is what you don’t see: overconfidence in AI’s novelty, untested assumptions, and a fuzzy plan for how users will adapt. Below, we’ll explore the six biggest ways AI projects go off the rails, along with strategies to mitigate risk and apply a rigorous process to your next AI initiative.

How to fail at AI

Tech for tech’s sake

If all you have is a hammer, every problem looks like a nail. Many companies treat AI as a universal solution, hoping it will solve everything from user engagement to operational bottlenecks. Projects often get green-lit because someone says, “We need AI,” instead of, “We have a specific user problem that AI can solve.” That’s how LinkedIn ended up rolling out irrelevant, sometimes comically bad AI-driven prompts—diminishing trust on a platform that thrives on professional authenticity.

When teams jump on the AI bandwagon just for the hype, they skip critical questions like “Which users does this feature serve?” and “Is this the best tool for the job?” The result is an expensive, high-profile failure that nobody benefits from.

Key way to fail: Focus on what’s trendy, not on what’s actually needed. Skip pesky “business needs” and “user research” and jump right into an AI project.

Poor market fit

Just because an AI concept exists doesn’t mean users actually want it. Microsoft’s Cortana tried to stand out against Siri and Alexa, but the market was already saturated with services that had a built-in ecosystem advantage. Lacking a clear differentiator, Cortana never resonated strongly with users, leading to repeated pivots and eventual scale-backs.

An AI-driven product without genuine demand or value ends up as a burden on your team. You might sink countless hours into adding features to “catch up” with competitors, rather than carving out a unique proposition that fulfills a real need.

Key way to fail: Ignore user research and the market landscape. Launch a “me too” AI feature with no unique value, hoping users will magically adopt it.

Unlimited scope

Boiling the ocean with AI means trying to solve every problem, often before you’ve solved even one. Some enterprise AI projects aim to build massive, end-to-end solutions from day one—only to discover they need specialized hardware, endless data pipelines, and constant strategy shifts. These moving targets lead to stalled timelines, ballooning budgets, and a mountain of half-finished code.

In a rush to deliver “the ultimate AI platform,” teams can overlook the importance of incremental releases, user feedback loops, and phased rollouts. Without constraints, your project risks becoming an unmanageable beast that never provides real, measurable value.

Key way to fail: Avoid defining a core problem to solve. Let project scope expand endlessly, and keep adding new features before you’ve delivered anything concrete.

Lack of trust

Even the most advanced AI can flop if people don’t trust it. IBM Watson for Oncology promised groundbreaking medical insights, but when hospitals and doctors couldn’t see the logic behind its treatment recommendations, skepticism grew. When hospitals tried to teach it their own protocols and saw no improvement, that skepticism deepened.

Without transparency and some degree of user control, AI can look like a black box that makes arbitrary choices. That’s a recipe for user pushback, especially in sectors like healthcare, finance, or security, where clarity and trust are paramount.

Key way to fail: Treat AI like a magic black box. Keep assumptions hidden from users, and never explain how decisions are made.

Garbage in, garbage out

AI is only as good as the data feeding it. Amazon’s AI hiring tool famously absorbed historical biases and ended up filtering out qualified female applicants, because the system “learned” from biased hiring patterns of the past. If your data is skewed, incomplete, or poorly labeled, the AI’s conclusions and recommendations will be, too.

Data validation is time-consuming but non-negotiable. If you don’t review a dataset for accuracy and potential bias, you could deploy features that undermine your own goals, alienate users, and even expose you to legal liability.
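To make the idea of data validation concrete, here is a minimal sketch of a pre-training audit. It is an illustration only, not a complete bias review: the function name `audit_records`, the field names, and the sample hiring records are all hypothetical, and a real audit would look at far more than missing values and per-group outcome rates.

```python
from collections import Counter, defaultdict


def audit_records(records, required_fields, label_field, group_field):
    """Return simple data-quality signals for a list of dict records."""
    # Count records where any required field is missing or empty.
    incomplete = sum(
        1 for r in records
        if any(r.get(f) in (None, "") for f in required_fields)
    )

    # Label balance: how often each label value occurs.
    label_counts = Counter(r.get(label_field) for r in records)

    # Positive-label rate per group, to surface skew inherited from history.
    by_group = defaultdict(lambda: [0, 0])  # group -> [positives, total]
    for r in records:
        counts = by_group[r.get(group_field)]
        counts[0] += 1 if r.get(label_field) == 1 else 0
        counts[1] += 1
    positive_rate = {g: pos / n for g, (pos, n) in by_group.items()}

    return {
        "total": len(records),
        "incomplete": incomplete,
        "label_counts": dict(label_counts),
        "positive_rate_by_group": positive_rate,
    }


# Hypothetical historical hiring data (made up for this example).
records = [
    {"years_experience": 5, "group": "A", "hired": 1},
    {"years_experience": 3, "group": "A", "hired": 1},
    {"years_experience": 6, "group": "B", "hired": 0},
    {"years_experience": None, "group": "B", "hired": 1},
]

report = audit_records(records, ["years_experience"], "hired", "group")
print(report)
```

A large gap between groups in `positive_rate_by_group` doesn’t prove the data is biased, but it is a signal worth investigating before a model learns from it.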

Key way to fail: Skip data validation. Assume all datasets are perfect, no matter where they come from.

No change management

Every project benefits from effective change management, and AI projects benefit more than most. Adopting AI can require completely rethinking workflows, roles, and cultural norms within an organization. If leaders don’t communicate a clear vision or offer enough training, employees may resist or misuse the new tools. In large companies, entire departments can dig in their heels if they see AI as a threat to their job security or routines.

Ignoring the human side of AI adoption leads to low morale, confusion, and limited usage of your fancy new system. Without structured change management, like phased rollouts, well-documented guidelines, and strong executive support, AI can become shelfware that nobody fully embraces.

Key way to fail: Implement AI overnight with minimal communication and zero training. Let everyone fend for themselves, and don’t bother measuring how things are going with the rollout.

Sound familiar? If your AI adoption is going off the rails, you need our AI Agents Workshop. We’ll help you adopt AI sustainably and identify key focus areas in just a few hours.

How not to fail at AI

Building AI systems that actually deliver value involves more than plugging in an algorithm. At Bitovi, we emphasize thorough planning, goal setting, and risk mitigation throughout the lifecycle.

Risk mitigation

Leveraging insights from user research, data audits, and technical feasibility checks, we identify risky assumptions early, before they derail development. We like to break big ideas into smaller pieces so we can estimate accurately, test rigorously, and adjust quickly if the data or user feedback doesn’t align with the initial plan.

Addressing risks head-on (like data bias, market misalignment, or stakeholder pushback) means your AI project is more likely to stay on track, deliver results, and adapt to shifting needs.

Scientific approach

Part of mitigating risk is knowing how confident you are in any assumption or plan. If your confidence is below about 80%, that represents significant uncertainty—so isolate that piece, form a hypothesis, and run a time-bound test to see if your assumption holds up. By gathering real data and user feedback, you reduce the unknowns and push your confidence closer to 100%.

This “test and learn” cycle fosters continuous improvement rather than a single “big bang” release. If the test data contradicts your assumption, you can pivot quickly. If it supports your hypothesis, you reintegrate the findings into your AI project and move on to the next challenge.
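The triage step described above can be sketched in a few lines. This is a hedged illustration, not Bitovi’s actual tooling: the `Assumption` and `Experiment` classes, the `plan_experiments` function, the five-day default time box, and the sample assumptions are all hypothetical. Only the roughly 80% threshold comes from the text.

```python
from dataclasses import dataclass

CONFIDENCE_THRESHOLD = 0.80  # the "about 80%" cutoff from the text


@dataclass
class Assumption:
    statement: str
    confidence: float  # the team's own estimate, from 0.0 to 1.0


@dataclass
class Experiment:
    hypothesis: str
    time_box_days: int


def plan_experiments(assumptions, time_box_days=5, threshold=CONFIDENCE_THRESHOLD):
    """Create a time-boxed experiment for each assumption below the threshold.

    The least confident assumptions come first, since they carry the most risk.
    """
    risky = sorted(
        (a for a in assumptions if a.confidence < threshold),
        key=lambda a: a.confidence,
    )
    return [Experiment(a.statement, time_box_days) for a in risky]


# Hypothetical assumptions and confidence estimates for an AI feature.
assumptions = [
    Assumption("Users want AI-generated summaries", 0.55),
    Assumption("Our historical data is labeled consistently", 0.70),
    Assumption("The API can respond within our latency budget", 0.90),
]

experiments = plan_experiments(assumptions)
for e in experiments:
    print(f"Test within {e.time_box_days} days: {e.hypothesis}")
```

After each test, the team would update the confidence estimate with what it learned and rerun the triage, which is the “test and learn” loop in miniature.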

Bitovi’s practical approach to AI

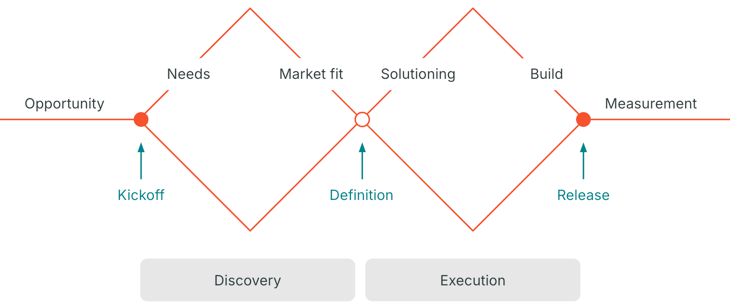

Bitovi’s process tackles the pitfalls of AI by defining the project first and then iterating on solutions quickly. It alternates rounds of divergent thinking (where we come up with ideas) and convergent thinking (where we evaluate those ideas and refine them into the best course of action).

- Assess the opportunity: Research business and user needs to identify what we could do and ensure we know who it’s for and what they need.
- Ensure market fit: Refine our ideas against competitive research, build out our goals and objectives, identify the biggest impact we can make, and clearly define the problem we’re going to solve.
- Solution planning: Ideate ways that AI can solve our problem and test different approaches to measure their viability.
- Build it!: Prioritize the work and break it down into tasks, then get into the heavy lifting of coding and designing interfaces, integrating with data sources and AI tools, and, of course, setting up ways to measure the objectives we’ve defined.

This framework ensures that any AI development is laser-focused on delivering practical, high-value outcomes while avoiding the dreaded “fail by ignoring UX” pitfall.

Conclusion

Failing at AI often boils down to ignoring the user and rushing headlong into untested assumptions—whether it’s believing the market automatically wants your new feature, skipping data checks, or refusing to plan for the human side of adoption. By contrast, successful AI marries technical innovation with rigorous UX research, market validation, and iterative improvements.

When you combine that with risk mitigation, a scientific approach to managing uncertainty, and a culture of change management, you’re far more likely to deliver AI that genuinely improves people’s lives. That’s how you not only avoid catastrophic failure but actually win with AI.

Ready to take on a thoughtful, user-centric path to AI success? Our AI Agents Workshop will give you an expert starting point on data strategy, user validation, risk mitigation, and more in just half a day.