Managing infrastructure-as-code repositories that drive complex systems through automated pipelines becomes difficult at scale. BitOps addresses this by letting you describe your infrastructure, and the artifacts deployed onto that infrastructure, for multiple environments in a single place called an Operations Repo. It was created and open-sourced by Bitovi.

This is part 1 of a BitOps tutorial series.

Features

Flexible Configuration: Configure how BitOps deploys your application using environment variables or YAML.

Event Hooks: If BitOps doesn't have built-in support for your use case, you can execute arbitrary bash scripts at different points in BitOps’ lifecycle.

Agnostic Runtime: By bundling infrastructure logic with BitOps, you get the same experience regardless of which CI service runs your pipeline. You can even run BitOps locally!

How it Works

BitOps is a boilerplate Docker image for DevOps work. An operations repository is mounted into a BitOps container at /opt/bitops_deployment. BitOps will:

- Auto-detect any configuration belonging to one of its supported tools
- Loop through each tool and:
  - Run any pre-execute lifecycle hooks
  - Read in configuration
  - Execute the tool
  - Run any post-execute lifecycle hooks
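To make the loop above more concrete, here is a rough bash sketch of the same steps. It is not BitOps' actual implementation: the tool order, the hook directory names (bitops.before-deploy.d/ and bitops.after-deploy.d/, modeled on the names used in the BitOps docs), and the "execute" step, which only prints a line instead of running the tool, are all assumptions made for illustration.

```bash
#!/usr/bin/env bash
# Hypothetical sketch of the BitOps run loop; NOT BitOps' real source.
# Tool order, hook directory names and the "execute" step are assumptions.
set -eu

# Supported tools to auto-detect, in an assumed order.
TOOLS="terraform ansible cloudformation helm"

# Run every *.sh script in a hook directory (in glob order), if it exists.
run_hooks() {
  local hook_dir="$1" script
  [ -d "$hook_dir" ] || return 0
  for script in "$hook_dir"/*.sh; do
    [ -e "$script" ] || continue   # the glob matched nothing
    bash "$script"
  done
}

# deploy_environment OPS_REPO_ROOT ENVIRONMENT
deploy_environment() {
  local env_dir="$1/$2" tool tool_dir
  [ -d "$env_dir" ] || { echo "no such environment: $2" >&2; return 1; }
  for tool in $TOOLS; do
    tool_dir="$env_dir/$tool"
    [ -d "$tool_dir" ] || continue                  # 1. auto-detect the tool
    run_hooks "$tool_dir/bitops.before-deploy.d"    # 2a. pre-execute hooks
    if [ -f "$tool_dir/bitops.config.yaml" ]; then  # 2b. read configuration
      echo "config: $tool_dir/bitops.config.yaml"
    fi
    echo "execute: $tool ($tool_dir)"               # 2c. placeholder for the tool
    run_hooks "$tool_dir/bitops.after-deploy.d"     # 2d. post-execute hooks
  done
}

# Example: ./bitops-loop.sh ./my-ops-repo backend-test
if [ "$#" -eq 2 ]; then
  deploy_environment "$1" "$2"
fi
```

Tool directories that do not exist are simply skipped, which mirrors the auto-detect step: an environment that only has a terraform/ directory never touches Ansible, CloudFormation, or Helm.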

Operations Repository

An operations repo is a repository that defines the intended state of your cloud infrastructure. It is mounted as a volume into a BitOps Docker container, and BitOps works its magic!

docker pull bitovi/bitops

cd $YOUR_OPERATIONS_REPO

docker run -v $(pwd):/opt/bitops_deployment bitovi/bitops

The structure of an operations repo is broken down into environments, deployment tools, and configuration.

Environments

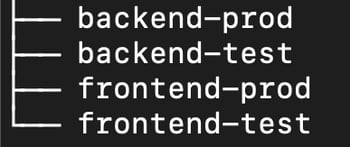

An environment is a directory that lives at the root of an operations repository and is used to separate applications and/or environments. For example, if your application has a backend and frontend component for production and test, the root of your operations repo may contain a directory for each.

The directory-per-environment pattern is preferable to having a branch for each environment, because it lets you manage the state of all your infrastructure from one location without accidentally merging test configuration into your production environment.

When running BitOps, you provide the environment variable ENVIRONMENT, which tells BitOps which environment directory's configuration to use. In more complex setups, where multiple components or environments depend on each other, you can run BitOps multiple times in a single CI/CD pipeline by calling docker run with a different ENVIRONMENT value each time.

For example:

docker pull bitovi/bitops

# Deploy backend
docker run \
  -e ENVIRONMENT="backend-test" \
  -e AWS_ACCESS_KEY_ID=$AWS_ACCESS_KEY_ID \
  -e AWS_SECRET_ACCESS_KEY=$AWS_SECRET_ACCESS_KEY \
  -e AWS_DEFAULT_REGION="us-east-2" \
  -v $(pwd):/opt/bitops_deployment \
  bitovi/bitops:latest

# Deploy frontend
docker run \
  -e ENVIRONMENT="frontend-test" \
  -e AWS_ACCESS_KEY_ID=$AWS_ACCESS_KEY_ID \
  -e AWS_SECRET_ACCESS_KEY=$AWS_SECRET_ACCESS_KEY \
  -e AWS_DEFAULT_REGION="us-east-2" \
  -v $(pwd):/opt/bitops_deployment \
  bitovi/bitops:latest
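Because the backend and frontend invocations differ only in their ENVIRONMENT value, a single pipeline step can loop over environments in dependency order. The script below is a sketch under a few assumptions: the AWS credentials are already exported by the CI job, the script name is hypothetical, and it prints the docker commands (a dry run) unless DRY_RUN=0 is set. Passing -e AWS_ACCESS_KEY_ID without a value makes Docker copy that variable from the host environment, which keeps the secret off the command line and out of the dry-run output.

```bash
#!/usr/bin/env bash
# Sketch: deploy several BitOps environments, in order, from one CI step.
# Assumes AWS_ACCESS_KEY_ID and AWS_SECRET_ACCESS_KEY are exported by the CI job.
set -eu

# Safe by default: print the docker commands. Set DRY_RUN=0 to execute them.
DRY_RUN="${DRY_RUN:-1}"

run() {
  if [ "$DRY_RUN" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

# Deploy each environment named in the arguments, in the order given.
# Under "set -e" the script stops at the first failing deployment.
deploy_all() {
  local env
  for env in "$@"; do
    # "-e NAME" without a value copies NAME from the host environment,
    # so the secret never appears on the command line or in dry-run output.
    run docker run \
      -e ENVIRONMENT="$env" \
      -e AWS_ACCESS_KEY_ID \
      -e AWS_SECRET_ACCESS_KEY \
      -e AWS_DEFAULT_REGION="us-east-2" \
      -v "$(pwd)":/opt/bitops_deployment \
      bitovi/bitops:latest
  done
}

deploy_all "$@"
```

In a pipeline you might call it as DRY_RUN=0 ./deploy-all.sh backend-test frontend-test, listing the backend first on the assumption that the frontend depends on it.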

Deployment Tools

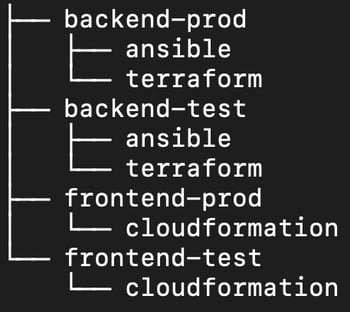

Within each environment directory are sub-directories that group deployment tools by name. Each deployment tool directory is optional. For example, if your application only requires Terraform, you do not need an ansible/, cloudformation/, or helm/ sub-directory in your environment directory.

Continuing with our frontend and backend example, if your frontend only requires CloudFormation but your backend requires both Ansible and Terraform, your operations repo would look like the one shown above.
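A quick way to lay out that structure on disk is a couple of mkdir -p calls. This sketch only creates empty directories; the backend-/frontend- prefixes follow the ENVIRONMENT values used earlier (backend-test, frontend-test), while the script name and any stage names other than test are hypothetical. Each tool directory still needs the tool's own files plus a bitops.config.yaml, and Git will not track the directories until they contain files.

```bash
#!/usr/bin/env bash
# Sketch: create the example operations repo layout (directories only).
# "<component>-<stage>" naming follows the ENVIRONMENT values used earlier;
# which stages you create (e.g. test, prod) is up to you.
set -eu

# scaffold_ops_repo ROOT STAGE
scaffold_ops_repo() {
  local root="$1" stage="$2"
  # The backend needs both Ansible and Terraform.
  mkdir -p "$root/backend-$stage/ansible" "$root/backend-$stage/terraform"
  # The frontend only needs CloudFormation.
  mkdir -p "$root/frontend-$stage/cloudformation"
}

# Example: ./scaffold.sh my-ops-repo test
if [ "$#" -eq 2 ]; then
  scaffold_ops_repo "$1" "$2"
fi
```

Running it once per stage (for example test and then prod) gives one environment directory per component and stage, matching the directory-per-environment pattern described earlier.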

Configuration

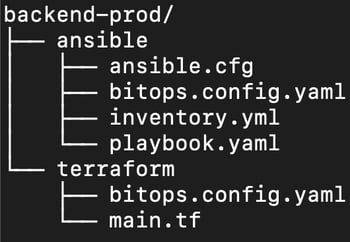

Each deployment tool directory contains your actual infrastructure as code, structured exactly as that tool expects. For example, the terraform sub-directory should contain files just as Terraform would expect them.

Along with the standard contents of a tool's sub-directory, there is a special file called bitops.config.yaml. This file tells BitOps how to run your infrastructure code. More information about bitops.config.yaml can be found in the official docs.

To create your own operations repository, refer to the official docs.

Combining BitOps with an Application Repo

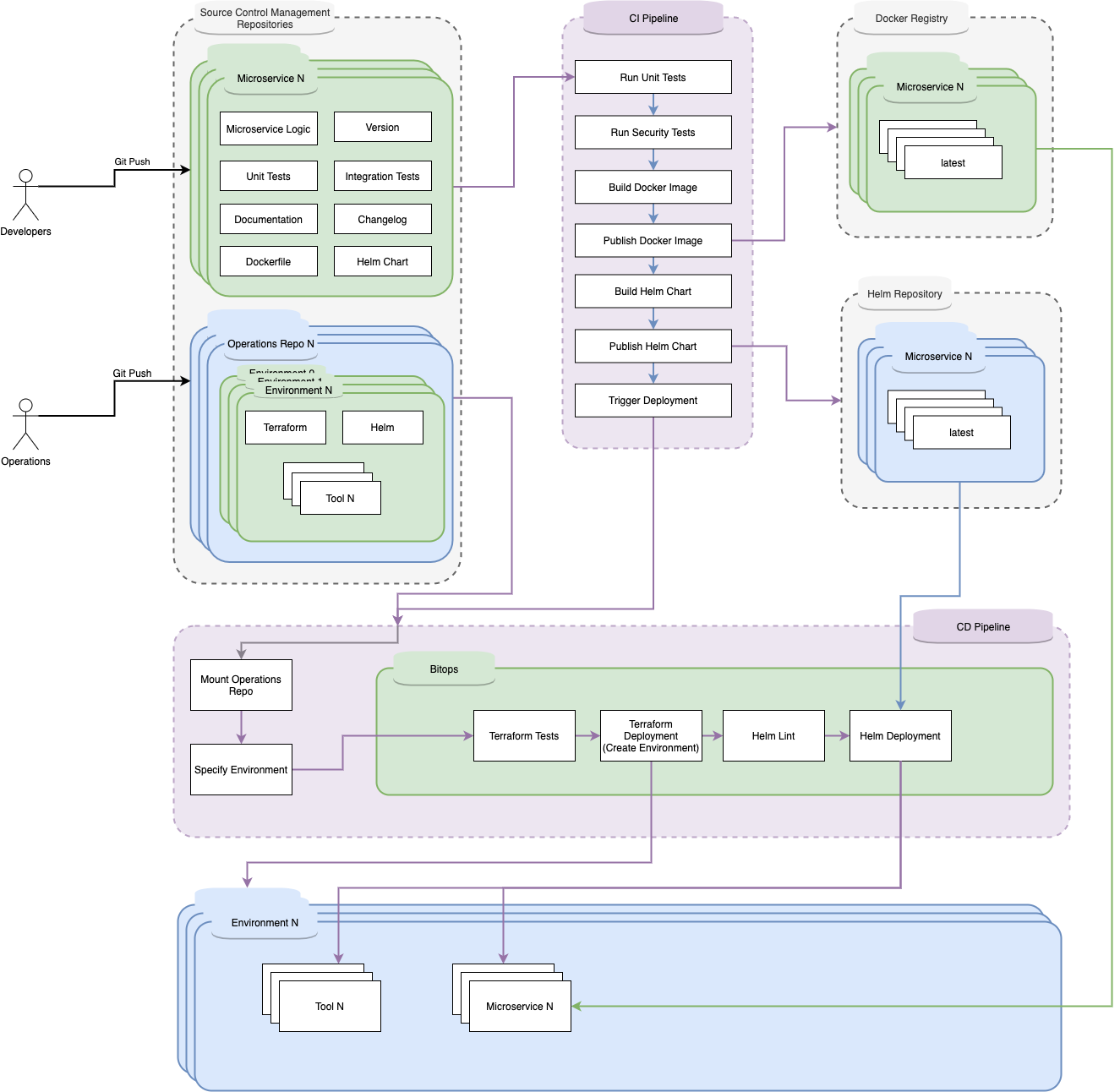

Combining a BitOps operations repository with an existing application repository creates a deployment flow where the application repository’s pipeline is responsible for producing an artifact and notifying the operations repository. The operations repository will create the necessary infrastructure and deploy the artifact to it.
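How the application pipeline notifies the operations repository is left open here. One option, assuming both repositories are hosted on GitHub, is GitHub's repository_dispatch REST endpoint (POST /repos/{owner}/{repo}/dispatches). In this sketch the event type deploy-artifact, the artifact_tag payload field, and the GITHUB_TOKEN variable (a token allowed to trigger workflows in the operations repo) are assumptions.

```bash
#!/usr/bin/env bash
# Sketch: from the application repo's pipeline, tell the operations repo that
# a new artifact is ready. Assumes both repos are on GitHub and the ops repo
# has a workflow triggered by repository_dispatch. The event type and payload
# field name are hypothetical.
set -eu

# Build the JSON body. Assumes the tag has no quotes or backslashes
# (true for typical image tags such as "1.4.2" or a git SHA).
dispatch_payload() {
  local event_type="$1" artifact_tag="$2"
  printf '{"event_type":"%s","client_payload":{"artifact_tag":"%s"}}\n' \
    "$event_type" "$artifact_tag"
}

# notify_ops_repo OWNER/REPO EVENT_TYPE ARTIFACT_TAG
notify_ops_repo() {
  local repo="$1"
  # "--data @-" makes curl read the request body from stdin.
  dispatch_payload "$2" "$3" | curl --fail --silent --show-error \
    -H "Accept: application/vnd.github+json" \
    -H "X-GitHub-Api-Version: 2022-11-28" \
    -H "Authorization: Bearer ${GITHUB_TOKEN:?GITHUB_TOKEN must be set}" \
    --data @- \
    "https://api.github.com/repos/${repo}/dispatches"
}

# Example: ./notify-ops.sh my-org/my-ops-repo deploy-artifact 1.4.2
if [ "$#" -eq 3 ]; then
  notify_ops_repo "$1" "$2" "$3"
fi
```

On the operations side, a workflow declared with on: repository_dispatch and types: [deploy-artifact] would receive the tag as github.event.client_payload.artifact_tag and could then run BitOps for the relevant environment.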

Here is an example of an application that is deployed to a Kubernetes cluster with Terraform and Helm.

Learn More

Want to learn more about using BitOps? Check out our GitHub, our official docs, or come hang out with us in the #bitops channel on Slack! We’re happy to assist you at any time in your DevOps automation journey!